MaNIS Interface Project

Assignment 4 - Low-fi Prototyping and Usability Testing

Introduction

The MaNIS application (Mammal Networked Information System) is a network of distributed databases of mammal specimen data. The project is a collaboration between 17 research institutions and natural history museums, funded by the National Science Foundation. MaNIS makes information available for nearly a million museum specimens. We are developing a prototype for a user interface for the MaNIS application.

The purpose of our testing was to determine whether users would understand how to use the interface without any training or explanation. Primarily, we wanted to make sure that they could accomplish realistic searches of the MaNIS database using a pre-defined set of search criteria such as taxon, location, date, and curation. Secondarily, we wanted to observe whether users would employ the utilities for manipulating or viewing search results, such as sorting, printing, exporting, and limiting fields. Where possible, we have designed our prototype using the Flamenco model of combining browsing and searching, and we wanted to see whether users would intuitively understand this model.

Prototype

We created a paper mock-up for our prototype. The mock-up was constructed with simple materials: paper, pen and pencil, and Post-it notes. Most of the UI controls were hand-drawn. Despite the simplicity of the materials, the prototype simulated most of the functionality of the interface in detail. We simulated real results from database searches by downloading selected result sets from MaNIS into Excel and printing them out.

The prototype consisted of a search criteria panel on the left, a search results panel on the right, and a navigation panel at the top for switching between the search criteria panels. As users navigated through the user interface, we would overlay new panels to represent the current state of the interface. Users could "type" in text boxes by writing with a pencil, and they could "click" on buttons and links by tapping them with their finger.

Method

Participants

All of our participants were selected because they represented different types of users who would use MaNIS in the course of their work: scientists, curators, and consultants.

First participant: a tropical ecologist and freelance popular science journalist with a scientific publications record on small mammals, monkeys, and bats.

Second participant: an assistant in the University's natural history museum and a graduate student in zoology whose PhD research focuses on the behavior of a small mammal.

Third participant: a wildlife biologist who works for a small environmental consulting firm.

Task Scenarios

We used the following scenario with all of our users, and designed it to ensure that each task touched on a main feature of the interface:

You need to review the taxonomy of the Hairy-tailed bats, genus Lasiurus, as a foundation for ecological fieldwork observations in California. You're also interested in any information about the changes in the bats' ranges in the last 80 years. By examining DNA from museum specimens and the ones you catch in the field, you hope to be able to say something about the robustness of the hairy-tailed bat population's genetic diversity.

Task 1: Get a count of available specimens for Lasiurus. To be sure to capture all specimens, include all appropriate synonymies for Lasiurus in your search.

What we looked for: Can the user tell how to specify the search criteria (by choosing the Taxon link)? Do they notice that synonymies are included in their search by default?

Task 2: Narrow your results to specimens collected in California since 1920.

What we looked for: Does the user understand the relationship between the right and left panels, that is, that changes made in the search criteria panel immediately affect (and narrow) the results panel? How will they interact with the results panel?

Task 3: Identify specimens which will potentially provide good material for DNA studies.

What we looked for: Will the user click on the Curation link to set this type of search criteria? Will the user select from the list of available preparations?

Task 4: In order to download the results, specify that the results include only the following information:

- Institution

- Catalog number

- Field notes

- Preparation

- Collection locality

- Collection date

What we looked for: Will the user be able to figure out where to set these criteria? Will they understand how to manipulate the results table? Will they take advantage of the shortcuts made available?

Task 5: Download the results. You will use these to contact the collections' curators about specimen loans or a visit.

What we looked for: How will the user expect the results to be downloaded? Will they be satisfied with the operating system download facility or need a different interface?

Procedure

We used approximately the same procedure with each participant. We set aside 1.5 hours for each session. One team member acted as the facilitator, explaining the purpose of our project and of the user interface testing. The facilitator also responded to the user's questions, some of which were deferred until the end of the session. One team member acted as the "computer," replacing the various panels and labels of the paper prototype as needed. The two other team members took detailed notes on the process.

We asked the participant to complete each of the steps listed above, giving them as little explanation as possible, and asking them to think aloud as they worked through the tasks. At the end of the session, we answered any questions the participant asked and discussed problems encountered during the session. We also had a more general discussion about how they might use MaNIS in their work.

Test Measures

- Is the interface intuitive?

- Can users get started without any explanation of the interface?

- Is our terminology understandable?

- Do the main groupings of search criteria make sense?

- Can users find all of the functionality that they are looking for?

- Do users understand all of the user interface controls that we've provided?

Results

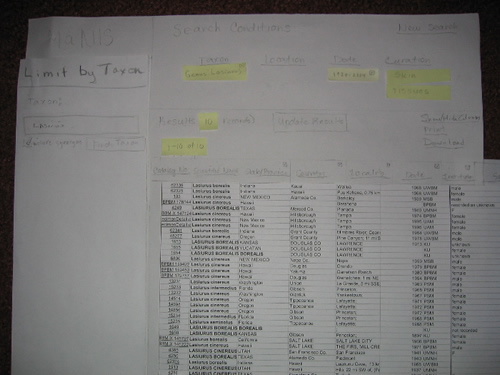

Limit by Taxon

| +/- | Observation | Severity |
|---|---|---|
| + | Always knew to click Taxon hyperlink to find a specific species. | |
| + | Liked that search conditions arranged in order of importance with Taxon always first in the list. | |
| + | Liked the "Did You Mean" feature for typing errors and misspellings. | |
| - | Finding taxon by hierarchy requires that the user have an intimate knowledge of Order, Kingdom, Family, etc. which defeats the purpose of the search. | medium severity |
| - | Didn't notice "include synonyms" feature. | medium severity |

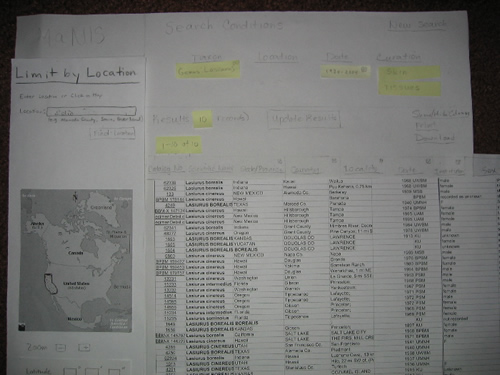

Limit by Location

| +/- | Observation | Severity |
|---|---|---|
| + | Liked mapping tool. | |
| - | Kept clicking on the sort "Location" hyperlink instead of the search condition "Location" hyperlink. | high severity |
| - | Unclear if Location search condition nullifies Taxon search condition supplied earlier. | high severity |
| - | Unclear how granular a location a user could enter. | medium severity |
| - | Wanted more advanced mapping features. | low severity |

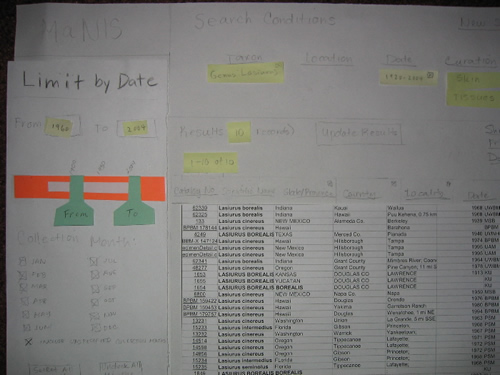

Limit by Date

| +/- | Observation | Severity |
|---|---|---|
| + | Sliders were a good tool. | |
| + | Liked pre-population of dates with ranges derived from result set. | |
| - | Didn't remember to click "Update Results" after entering the date search conditions. | high severity |

Limit by Curation

| +/- | Observation | Severity |
|---|---|---|
| + | Liked being able to narrow down to specific preparations and museums. | |
| + | Liked that values were defaulted to all preparations and all museums. | |
| - | Unclear on what "Curation" meant. | high severity |
| - | Clicked on the sort "Preparation" hyperlink instead of the "Curation" hyperlink to narrow by skin and skull. | high severity |
| - | Unclear if selecting both skull and skin will limit results to specimens that have both skull and skin and exclude those that have just skull or just skin. | high severity |

Sorting

| +/- | Observation | Severity |
|---|---|---|
| - | Had no idea that a sorting feature was available. | |
| - | Kept mistaking the sorting hyperlinks for search condition hyperlinks. | high severity |

Undo Search Condition

| +/- | Observation | Severity |
|---|---|---|
| + | Once users were told about the functionality of the "x", they liked it. | |
| - | Had no idea this feature was available. | medium severity |
| - | Didn't know that clicking the "x" would undo the condition. | medium severity |
| - | Ended up back-tracking to each condition and "erasing" parameters. | medium severity |

Add / Delete Fields

| +/- | Observation | Severity |
|---|---|---|
| + | Once users were told about the functionality of the "x", they liked it. | |
| - | Had no idea this feature was available. | low severity |
| - | Unclear on what "Add / Delete Fields" meant. | medium severity |
| - | Didn't know that clicking the "x" would hide the column on the table. | low severity |

Navigation Controls

| +/- | Observation | Severity |
|---|---|---|
| + | Liked the paging controls that allow navigation through hundreds of records with one click. | |
| + | Liked the record counts that give instant feedback on how the result set is changing as various search criteria are selected. | |

Download / Export / Print

| +/- | Observation | Severity |
|---|---|---|
| - | Wanted some way to select specific records for the download. | low severity |

"Back" to Previous Page

| +/- | Observation | Severity |
|---|---|---|
| - | Wanted to use the "browser back button" to revert to prior pages instead of the "back to results" hyperlink provided on the interface. | low severity |

Discussion

What We Learned

During the course of our three low-fi user tests, our test participants unearthed a wide range of usability issues. Although we were not surprised that our design had flaws, we were amazed at how effective our paper prototype was at eliciting genuine responses and valuable comments from our users. Some of the problems that the users encountered will be relatively easy to fix.

- Because of the confusion they caused, we will most likely remove the "x" (close) buttons that allowed users to delete columns in the results view. Users will still be able to customize their view by using the Show/Hide Columns feature.

- We will also change our interface to give users more control over where their downloaded files are saved and what they are named.

- The last of the easy fixes is to reword the example given on the Location search page. Because the most granular example was a county name, one user thought that county was as low as she could go in entering a location name. This showed us that we need to be careful what our examples tell users.

Some other issues that we will need to discuss for our next prototype are trickier than the ones mentioned above.

- The difference between the Location column sorting link and the Location search link was not clear to users, so we will have to figure out a way to guide the user to what he or she wants. This may involve making each more visually distinctive.

- We will have to clarify what checking multiple boxes in the search criteria does, i.e., whether it performs an AND operation or an OR operation.

- In addition, user interaction with the "Update Results" button will have to be examined and improved.
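To make the AND/OR ambiguity concrete, the following is a minimal Python sketch of the two possible readings of checking both "skin" and "skull". The record layout, the `preparations` field, the catalog numbers, and the helper names `match_all` and `match_any` are all hypothetical and chosen for illustration; they are not taken from MaNIS or from our prototype.

```python
# Hypothetical specimen records; field names and values are illustrative only.
specimens = [
    {"catalog": "A-1", "preparations": {"skin", "skull"}},
    {"catalog": "A-2", "preparations": {"skull"}},
    {"catalog": "A-3", "preparations": {"skin"}},
    {"catalog": "A-4", "preparations": {"alcohol"}},
]


def match_all(records, selected):
    """AND reading: keep records that have every checked preparation."""
    return [r["catalog"] for r in records if selected <= r["preparations"]]


def match_any(records, selected):
    """OR reading: keep records that have at least one checked preparation."""
    return [r["catalog"] for r in records if selected & r["preparations"]]


checked = {"skin", "skull"}
print(match_all(specimens, checked))  # ['A-1']
print(match_any(specimens, checked))  # ['A-1', 'A-2', 'A-3']
```

The same two checkboxes return one record under the AND reading and three under the OR reading, which is why the interface needs to state which one it applies.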

The most serious issues that our group will need to address involve the Taxon hierarchy view and the Curation category.

- We will continue to tackle the question of how to display taxonomic information in order to aid browsing and search.

- Users did not know what the Curation category contained, so we will have to either think about ways to make its contents more apparent or reorganize and categorize the contents entirely.

- We will need to make it clearer that specifying different search criteria narrows the user's current search unless the user begins a new search first.
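The narrowing behavior that users found unclear can be sketched in a few lines of Python. This is only an illustration under assumed names: the `Specimen` fields, the `Search` class, its `limit` and `undo` methods, and the four sample records are hypothetical and do not describe the actual MaNIS implementation. Each new condition is combined with the ones already set, and the record count is returned after every change, mirroring the count that participants liked in the results panel.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Specimen:
    genus: str
    state: str
    year: int


# Made-up sample records, for illustration only.
RECORDS = [
    Specimen("Lasiurus", "California", 1915),
    Specimen("Lasiurus", "California", 1950),
    Specimen("Lasiurus", "Nevada", 1960),
    Specimen("Myotis", "California", 1970),
]


class Search:
    """Holds the active search conditions; every condition narrows the results."""

    def __init__(self, records):
        self.records = list(records)
        self.conditions = {}  # condition name -> predicate function

    def limit(self, name, predicate):
        """Add or replace one condition and return the new record count."""
        self.conditions[name] = predicate
        return self.count()

    def undo(self, name):
        """Remove one condition (the "x" control) and return the new count."""
        self.conditions.pop(name, None)
        return self.count()

    def results(self):
        return [r for r in self.records
                if all(p(r) for p in self.conditions.values())]

    def count(self):
        return len(self.results())


search = Search(RECORDS)
print(search.limit("taxon", lambda r: r.genus == "Lasiurus"))       # 3
print(search.limit("location", lambda r: r.state == "California"))  # 2
print(search.limit("date", lambda r: r.year >= 1920))               # 1
print(search.undo("location"))                                      # 2
```

In this sketch the Location condition does not replace the Taxon condition; starting a new search would correspond to creating a fresh `Search` object with no conditions.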

What the Tests Could Not Tell Us

Clearly, we learned a great deal from these low-fi user tests and we have a lot of work to do for our next prototype. However, because the MaNIS application is data intensive, the tests could not tell us how users would interact with our interface using real data. For example, because we had to prepare screenshots and data beforehand, users had to use the search terms we gave them whether or not the terms meant anything to them. Also, although the tests revealed that the users liked instant feedback via the changing record count on the results view, it is unclear how quickly this feedback can be rendered in a real session given the large collection of records to be processed.

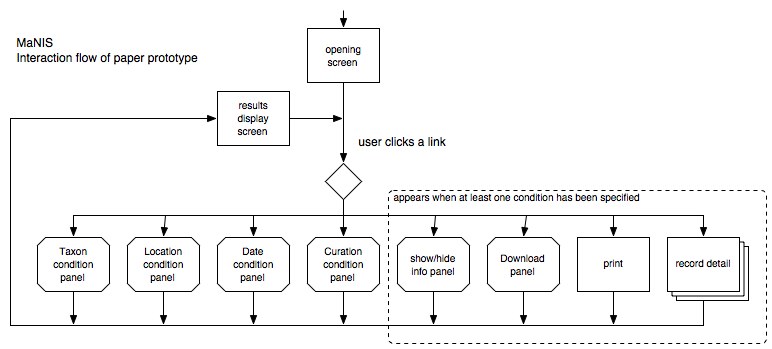

Interaction Flow